Lessons learned from a link audit and disavow for 100 million backlinks.

Win with aggregated link data from Google Search Console

Are you using link data from Google Search Console?

You don't get back a lot of data usually from Google (surprise?), so even experienced SEO people discard this option. They often simply don't want to go down the route to manually download the file and process it further.

LRT Certified Xpert Kamil Guzdek has shown on this 100M+ backlink site that

- weekly GSC downloads

- via multiple GSC properties

- over the course of half a year

lead to exciting results.

Learn all about Kamil's experience with this monster domain, and how you would NOT go crazy benefiting from the same method.

Enjoy & Learn

Christoph C. Cemper

PS: Did you know? Luckily, LRT automated that downloading many years ago.

A monster domain with 100M backlinks. Does Google take care of it?

A couple of months ago, I finished evaluating backlinks for one of my customers.


This was the single biggest link audit I have performed to date - evaluating over 100 million backlinks leading to a single domain.

Today, I will share with you three insights which helped me tremendously in recovering this domain.

I know that managing your SEO is not an easy task. You must constantly monitor your performance, optimize your website, improve your content and get new links.

Happily, for a couple of years now, you have not had to evaluate your links, right?

Google said so, and many SEO agencies still reiterate this.

It all started in 2016 with John Mueller stating that

"the large majority of websites don't need to do anything with disavow. Because (...) we're able to pick up normal issues anyway and we can take care of that". He referred to the "normal small business websites", whatever that is. It didn't sound like he meant websites in competitive landscapes, with SEOs who engage in link building, outreach and other tactics that are perhaps a bit riskier than "doing nothing with links".

But then something else happened in January 2019 - the very same John Mueller said that you should be checking your incoming backlinks, and that it is your responsibility.

https://twitter.com/JohnMu/status/1081221341389430784

At this point, I was very happy and felt confirmed.

I have always said that you should download your links from Google Search Console periodically if you see 150k lines in your files, but let's not jump ahead!

1. Set up your Google Search Console the right way

Most website owners who I know make a very simple mistake.

They know they need to verify their domain in Google Search Console to get information about the links which Google sees, and to see their organic stats. However, they verify only one version of their domain.

Let us say you just registered example.com.

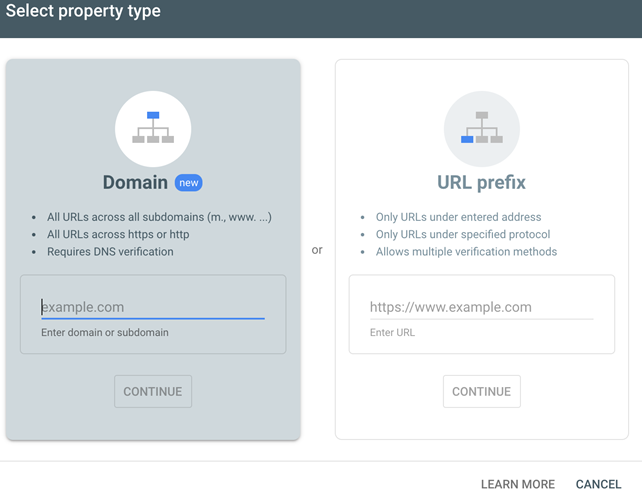

In Google Search Console, you will be given the possibility to register on the Domain level, or on the URL prefix level.

Which one you choose determines what data you see.

Verify On the URL prefix level

On the URL prefix level - you will see all of the links that start with the prefix you provided.

Most website owners do just that. They verify http://example.com (or https://www.example.com) and call it a day.

This option, however, is much more powerful than that. Think about it - wouldn't it be nice to also see all the traffic and links that reach your domain via the wrong protocol or the wrong subdomain?

There are some restrictions, however. This option means that you'll see the data only for URLs starting with the prefix that you enter.

So for example - if you verify https://www.example.com, you'll see data for:

- Home page (https://www.example.com/)

- https://www.example.com/directory

- https://www.example.com/directory/page

- and so on...

But you won't see:

- https://example.com/

- http://www.example.com/

- http://m.example.com

- https://m.example.com

Verify On the Domain level

On the Domain level - you'll see all the data within this particular domain.

So verifying example.com on the Domain level will mean that you'll see data for:

- www.example.com

- m.example.com

- example.com

and all other subdomains, pages and directories, for both http:// and https:// that belong to this domain.
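To make the difference between the two property types concrete, here is a minimal Python sketch of the matching rules as described above. The function names are made up for illustration, and the logic is an approximation of the described behavior, not Google's actual implementation.

```python
from urllib.parse import urlsplit


def covered_by_url_prefix(url: str, prefix: str) -> bool:
    """True if `url` falls under a URL prefix property such as https://www.example.com."""
    u, p = urlsplit(url), urlsplit(prefix)
    # Protocol and host must match exactly; only the path may extend the prefix.
    if (u.scheme, u.netloc.lower()) != (p.scheme, p.netloc.lower()):
        return False
    return (u.path or "/").startswith(p.path or "/")


def covered_by_domain(url: str, domain: str) -> bool:
    """True if `url` is on `domain` or one of its subdomains, for any protocol."""
    host = (urlsplit(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


prefix = "https://www.example.com"
for url in (
    "https://www.example.com/directory/page",
    "https://example.com/",
    "http://www.example.com/",
    "https://m.example.com",
):
    print(url, covered_by_url_prefix(url, prefix), covered_by_domain(url, "example.com"))
```

Only the first URL in the loop falls under the https://www prefix, while all four fall under the Domain property.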

That's great, but there's a catch - when you want to download backlinks from Google, there's a limit. It doesn't matter how many backlinks you have, you'll be able to download a sample of only around 150,000 - provided that you have that many links in your profile.

If your domain is small and has fewer than 200,000 backlinks, the Domain level verification should do the trick.

But what if you have 1,000,000 or even 100,000,000 backlinks?

You must diversify!

Note from Christoph

Another issue - you can only upload to the Disavow Tool in URL prefix mode. That means, if you only have the Domain level verified, you won't be able to use the Disavow Tool at all. Some people saw this as a reason for the Disavow Tool supposedly not being relevant anymore. That's wrong. The most logical reason for Google not to implement this is the potential damage you could do with a disavow on a Domain property: the impact is even higher than on a URL prefix property.

How do I get more backlinks from Google Search Console?

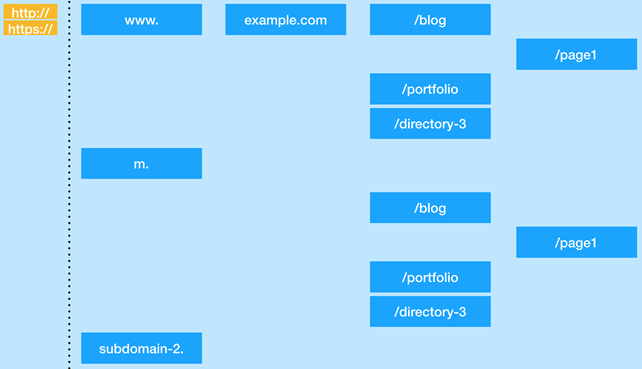

Let's consider the following setup.

We have:

- 1 domain (example.com),

- 3 directories (blog, portfolio, directory-3),

- 1 blog post (page1),

- 3 subdomains (www, m - mobile version, subdomain-2),

- and we also need to remember that there are two protocols in use - http and https.

First, we need to verify on the URL prefix level our main version of the domain.

Let's say we have an SSL certificate, in which case we would verify https://www.example.com.

Remember also to verify http://www.example.com, http://example.com and https://example.com.

It's often the case that your links and traffic don't lead to the primary version that you chose, even if you have all of the redirects in place.

Now, you would need to use your better judgment - if any part of your domain is very famous on the internet, and has many backlinks pointing to it, I would suggest verifying all of those as well.

On the other hand, if you don't know, it's better to add at least all of your 1st tier directories as well.

Here is some good news - often these are new folders, that only ever existed as this variation. So in many cases, here you would need to verify only your primary address. No http, http non-www, https non www.

So, in our case that would mean:

- https://www.example.com/blog

- https://www.example.com/portfolio

- https://www.example.com/directory-3

Additionally, if you know any particular page - for example a blog post - that generates tons of links over time, it would also be wise to add it, although there are very few cases that would really benefit from this. If your single blog post does not get over 100,000 backlinks, I wouldn't worry ;)

As a next step, we would need to take care of all of the subdomains.

As our case has a separate mobile-friendly subdomain - hosting all the same content - it would be wise to add all of those as well:

- http://m.example.com

- https://m.example.com

- https://m.example.com/blog

- https://m.example.com/portfolio

- https://m.example.com/directory-3

Also, we have subdomain-2, which would also require adding, in both the http:// and https:// versions.

Finally, to tackle all of the links from subdomains or directories that we might have missed, you should also verify at the Domain level.

If we were to list all of the verified properties for our setup, they would be:

- http://example.com

- https://example.com

- http://www.example.com

- https://www.example.com

- https://www.example.com/blog

- https://www.example.com/portfolio

- https://www.example.com/directory-3

- https://m.example.com

- http://m.example.com

- https://m.example.com/blog

- https://m.example.com/portfolio

- https://m.example.com/directory-3

- http://subdomain-2.example.com

- https://subdomain-2.example.com

- sc-domain:example.com (this is a Domain level verified property)
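If you prefer to generate such a list rather than type it, here is a small Python sketch that reproduces the 15 properties above. The function and its parameter names are hypothetical; it assumes every host is verified in both protocols and that directories are added only on the https version of the hosts you name.

```python
def gsc_properties(domain, subdomains, directories, hosts_with_directories):
    """Build the list of Search Console properties to verify for one domain."""
    hosts = [domain] + [f"{sub}.{domain}" for sub in subdomains]
    props = []
    # Root of every host, in both protocols.
    for host in hosts:
        for scheme in ("http", "https"):
            props.append(f"{scheme}://{host}")
    # First-tier directories, only on the https version of the chosen hosts.
    for sub in hosts_with_directories:
        for directory in directories:
            props.append(f"https://{sub}.{domain}/{directory}")
    # One Domain level property to catch whatever the prefixes miss.
    props.append(f"sc-domain:{domain}")
    return props


properties = gsc_properties(
    domain="example.com",
    subdomains=["www", "m", "subdomain-2"],
    directories=["blog", "portfolio", "directory-3"],
    hosts_with_directories=["www", "m"],
)
for prop in properties:
    print(prop)
```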

Additionally - depending on your situation - you also might consider adding:

- http://example.com/blog

- http://example.com/portfolio

- http://example.com/directory-3

- http://www.example.com/blog

- http://www.example.com/portfolio

- http://www.example.com/directory-3

- http://m.example.com/blog

- http://m.example.com/portfolio

- http://m.example.com/directory-3

Don't get me wrong, however. The fact that we just verified 15 versions of your domain doesn't mean that you'll get 15 times more links. Most of the time, around 80-90% of those files will have the same links, but the difference is what makes this worthwhile!

It may not seem like a lot - but remember that you should download your links from GSC regularly.

2. Download link files from your Search Console Weekly

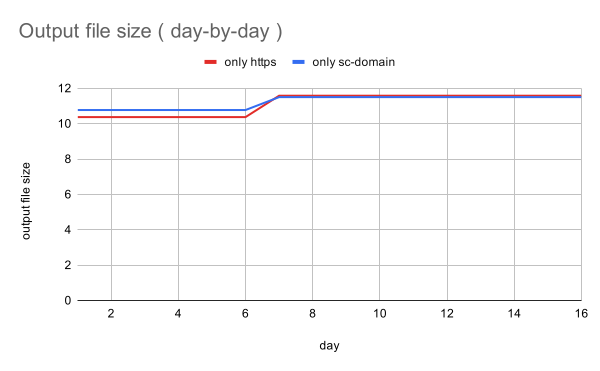

I did a simple test.

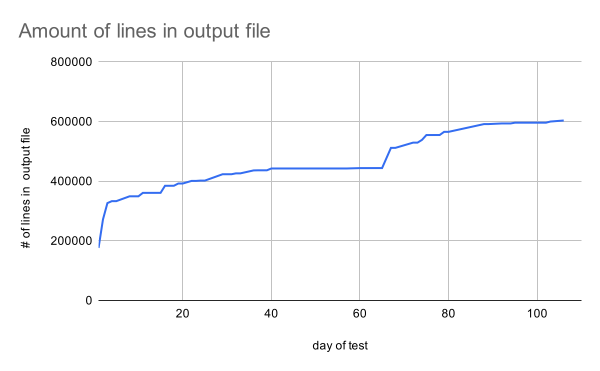

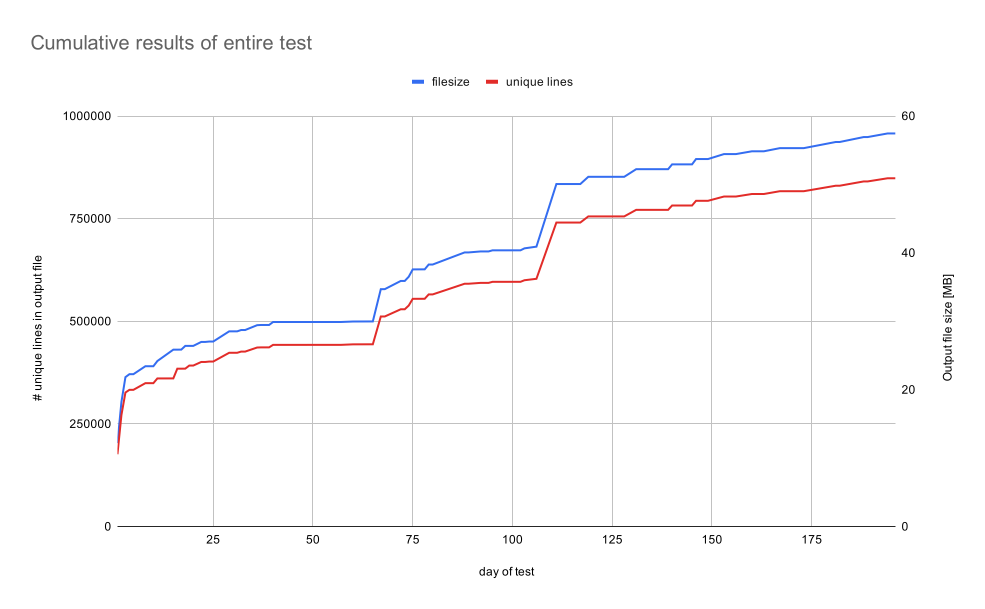

Over the course of 192 days, I downloaded backlinks from Google Search Console.

At that point in time, I had verified my customer's domain on the Domain level, but also an https://www version on the URL prefix level.

For 106 days, I downloaded from Search Console the "latest links" and "more sample links" for the sc-domain property.

Then, using the command-line interface in Google Cloud, I simply deleted the second column (as one of the files had a discovery date in the second column - irrelevant in building a link profile), merged the files and then exported the unique lines to an output file.
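The same three steps (drop the extra column, merge, keep unique lines) can be sketched in Python. This is not the set of commands used in the audit, only an equivalent under stated assumptions: each export is a CSV with a header row and the linking URL in the first column. The column names and sample rows below are made up.

```python
import csv
import io


def merge_unique_links(exports):
    """Return the unique linking URLs found across several CSV exports.

    `exports` is an iterable of open text files (or any iterables of CSV lines).
    Only the first column is kept, so a discovery date in a second column is dropped.
    """
    seen = set()
    merged = []
    for export in exports:
        rows = csv.reader(export)
        next(rows, None)  # skip the header row
        for row in rows:
            if not row:
                continue
            url = row[0].strip()
            if url and url not in seen:
                seen.add(url)
                merged.append(url)
    return merged


# Two tiny made-up exports standing in for the downloaded files.
latest = io.StringIO(
    "url,first_seen\nhttp://a.example/1,2019-01-02\nhttp://b.example/2,2019-01-03\n"
)
sample = io.StringIO("url\nhttp://b.example/2\nhttp://c.example/3\n")
unique_links = merge_unique_links([latest, sample])
print(len(unique_links), "unique links")
```

With real downloads you would pass files opened with `open(path, newline="", encoding="utf-8")` instead of the in-memory samples, and feed the previous output file back in as one of the inputs each week.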

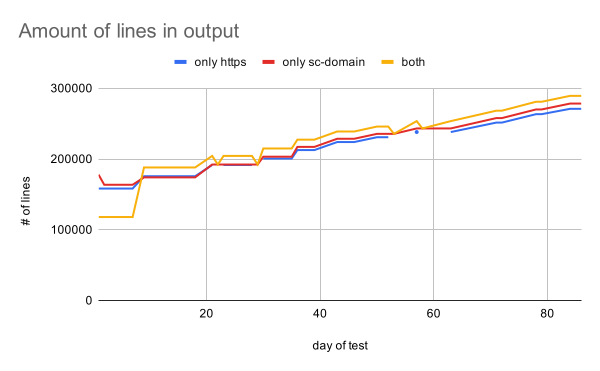

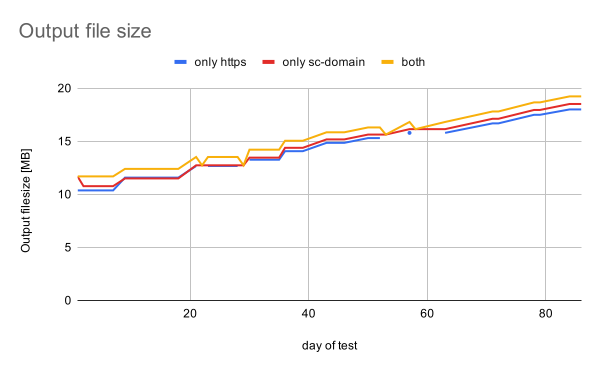

Then, for another 86 days, I started to also download link data for the https://www URL prefix property, and I kept creating the output file with CLI.

How often does Google update links in Search Console?

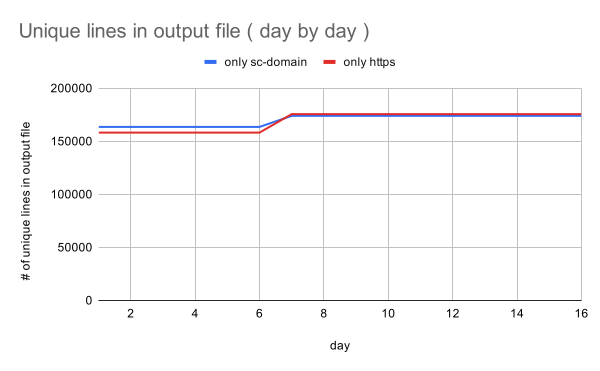

To answer this question, I downloaded and concatenated files from Search Console daily, for 16 days, both for the https://www and sc-domain versions.

Over the course of this time, you can see there is only one peak in the output file with unique backlinks.

This suggests that Google updates the links in those files once a week, so there's no need to download them more frequently. From this moment onwards, I downloaded the files once a week for the next 25 weeks.
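If you want to repeat this check on your own domain, a short Python sketch like the following can point out the days on which the output file actually grew. The helper name and the totals are made up for illustration; they are not the figures from this audit.

```python
def refresh_days(daily_unique_totals):
    """Return the 0-based day indexes on which the running total of unique links grew."""
    return [
        day
        for day in range(1, len(daily_unique_totals))
        if daily_unique_totals[day] > daily_unique_totals[day - 1]
    ]


# Hypothetical line counts of the merged output file, one per daily download.
totals = [1000, 1000, 1000, 1250, 1250, 1250, 1250]
print(refresh_days(totals))  # [3]
```

Days that do not show up in the result added nothing new, which is the signal that daily downloads are not worth the effort.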

How many backlinks can I get from Search Console?

If you are patient - you can get far more than the basic 150,000 you'll see for the first time.

The key to this is to download your files from Search Console for every property on a weekly basis. (Sounds crazy and undoable for you? Read below.)

The first file I downloaded had 176,000 lines. As you can see, just by downloading the same files from Google Search Console weekly, over the course of 3 months I tripled our link profile.

This made me wonder - what if we started to add other URL prefix type properties?

I decided to extend the test by simply downloading links from the https://www property and then combining this with the sc-domain property and seeing what happens.

As you can see, even though there's a major overlap between the verified https://www property and the sc-domain, there's also around 7-10% of uniqueness between the https and the sc-domain, hence the final output file is always a bit bigger.
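To measure this overlap on your own files, a rough Python sketch could look like this. The function name and the sample URLs are hypothetical; the inputs are simply two collections of linking URLs, one per property.

```python
def share_unique_to_first(first, second):
    """Fraction of the links in `first` that do not appear in `second`."""
    first, second = set(first), set(second)
    if not first:
        return 0.0
    return len(first - second) / len(first)


# Made-up link sets: ten links from the URL prefix property, twelve from sc-domain.
prefix_links = [f"http://site{i}.example/" for i in range(10)]
domain_links = [f"http://site{i}.example/" for i in range(1, 13)]

unique_share = share_unique_to_first(prefix_links, domain_links)
combined = set(prefix_links) | set(domain_links)
print(f"{unique_share:.0%} of the prefix links are new; combined file has {len(combined)} links")
```

Even a small unique share matters here, because it adds up across properties and across weeks.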

The last thing I needed to do was to concatenate my data from the first 106 days (only the sc-domain) with the data from the last 86 days (https://www + sc-domain), and see what changed.

As you can see, by simply adding the primary URL prefix property (the https://www version of my domain), in 6 months I went from 176,000 to 848,000 unique backlinks in my backlink profile. That is about 4.8 times as many links to evaluate, an increase of roughly 380%!

Now, you can imagine how many more backlinks I'd be able to collect if I downloaded on a weekly basis links from other URL prefix properties, such as http://, http://www, https:// and the properties set up for my most linked directories.

This way, if you keep doing this long enough, you're actually able to collect the link profile that Google has for your domain!

Always remember to assess the fullest Link Profile you can get.

It wouldn't make sense to evaluate only 100,000 backlinks if your domain has millions of them, right?

The more links that you can evaluate, the better for you!

3. Split your Disavow file

Once you've collected a bigger Link Profile file, import it into Link Detox - that way, the links which Google shows you in Search Console can be evaluated against the same criteria you use for your normal analysis.

When you're done assessing the quality of your backlinks and creating your disavow file - check two things:

- Does your file have more than 100,000 lines?

- Is your Disavow.txt file bigger than 2 MB?

If you can answer yes to either of those questions, you will not be able to upload your disavow file in one piece.
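Both checks are easy to script. Here is a minimal Python sketch using the two limits named above; the function name is made up, and treating "2 MB" as 2 x 1024 x 1024 bytes is an assumption.

```python
MAX_LINES = 100_000
MAX_BYTES = 2 * 1024 * 1024  # assumption: "2 MB" read as 2 MiB


def needs_splitting(disavow_text: str) -> bool:
    """True if the disavow file content exceeds either the line or the size limit."""
    line_count = len(disavow_text.splitlines())
    byte_count = len(disavow_text.encode("utf-8"))
    return line_count > MAX_LINES or byte_count > MAX_BYTES


small_file = "# spam network\ndomain:spam.example\nhttp://bad.example/page\n"
print(needs_splitting(small_file))  # False
```

For a real file, read it with `open("disavow.txt", encoding="utf-8").read()` and pass the result to the function.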

You might try various tricks and waste a lot of time while trying to minify your file - assessing the links again to check if some of them could be disavowed as a domain, or even trying to select out "the worst of The Worst" ones.

But there is a simpler solution.

Remember my first tip to set up your Google Search Console the right way?

Now is the time to make some use of it.

As it turns out - you can upload your different disavow files to various URL prefix properties in your Search Console.

So, in our example, you could upload

- one disavow file to https://www.example.com/blog,

- another one to http://example.com,

- and another one to https://www.example.com/directory-3.

Splitting your single big disavow file still means there is some work ahead of you, but thanks to LinkResearchTools, we can make it relatively simple!

Find out how to split up the files

At this point, you should know which parts of your domain attract the most backlinks; we have defined that in the structure above already.

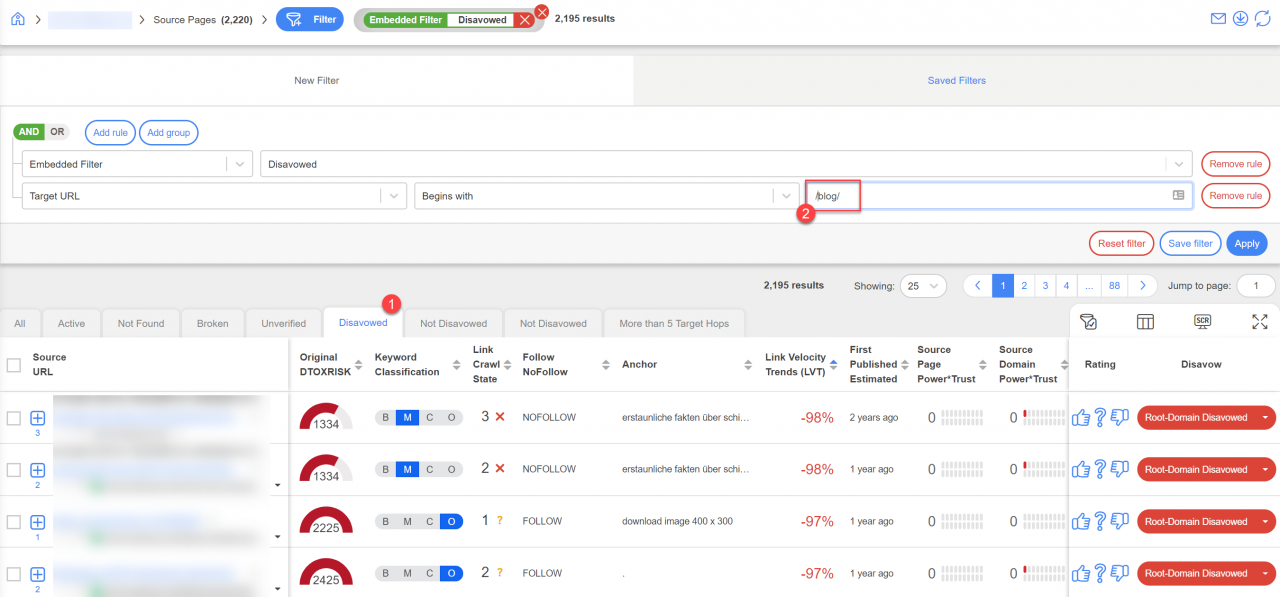

You can use the simple built-in filter tab for the Disavowed links (1) and then apply an additional rule for the field Target URL to match the folder.

In this filter, simply type those parts of your domain - a directory or a subdomain - which have the most links.

In this example, blog/ (2).

LRT currently only supports exporting the full disavow file, as that is what the majority of users need. And maybe they still hope for Google to lift that minuscule 2 MB limit?

From this point, I would suggest exporting the Excel files or CSV files of the data you see in the output, and working out your disavow file for a part of the domain from there.

Once you've sorted out the disavow files for the most heavily linked parts of your domain, all you need to do is go to a particular URL prefix property and upload your specific Disavow file for that property.
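If you work from an exported CSV, the split itself can be sketched in a few lines of Python. This is one possible approach, not an LRT feature: it assumes each exported row gives you the linking URL and its Target URL, and the function name and sample rows are made up.

```python
from collections import defaultdict


def split_disavow(links, properties):
    """Group disavowed source URLs by the most specific property their target falls under.

    `links` is an iterable of (source_url, target_url) pairs already marked for disavow.
    `properties` is a list of URL prefix properties. Targets that match none of
    them are collected under the key None.
    """
    longest_first = sorted(properties, key=len, reverse=True)  # longest prefix wins
    groups = defaultdict(dict)  # dict keys act as an ordered, duplicate-free set
    for source_url, target_url in links:
        prop = next((p for p in longest_first if target_url.startswith(p)), None)
        groups[prop][source_url] = None
    return {prop: list(sources) for prop, sources in groups.items()}


links = [
    ("http://spam1.example/a", "https://www.example.com/blog/page1"),
    ("http://spam2.example/b", "https://www.example.com/"),
    ("http://spam3.example/c", "http://example.com/old"),
    ("http://spam1.example/a", "https://www.example.com/blog/page1"),  # duplicate row
]
properties = ["https://www.example.com", "https://www.example.com/blog", "http://example.com"]

files = split_disavow(links, properties)
for prop, sources in files.items():
    print(prop, len(sources))
```

Each list can then be written to its own text file, one URL per line, and checked against the two limits before upload.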

And once you have done that, don't forget to run the Link Detox Boost to speed up your recovery process!

Kamil Guzdek

How LinkResearchTools supports the described process

Some notes from the Team of LRT and Christoph.

- The good news first - all of the above has been supported, fully automated, since 2014 in LinkResearchTools and Link Detox.

- Downloading link data from Google Search Console at a regular frequency and from multiple Google Search Console properties was described by Bartosz Goralewicz in 2014, and LRT has supported that feature since.

- Thus, the method to accumulate a maximum of link data that Kamil explains here is not new. However, we find it very interesting to see actual numbers from such a large domain published. It's also very good to see that little or actually nothing has changed since 2014. The new Domain property allows an extra "global" property, but for using the Disavow Tool you still need to set up the individual URL prefix properties.

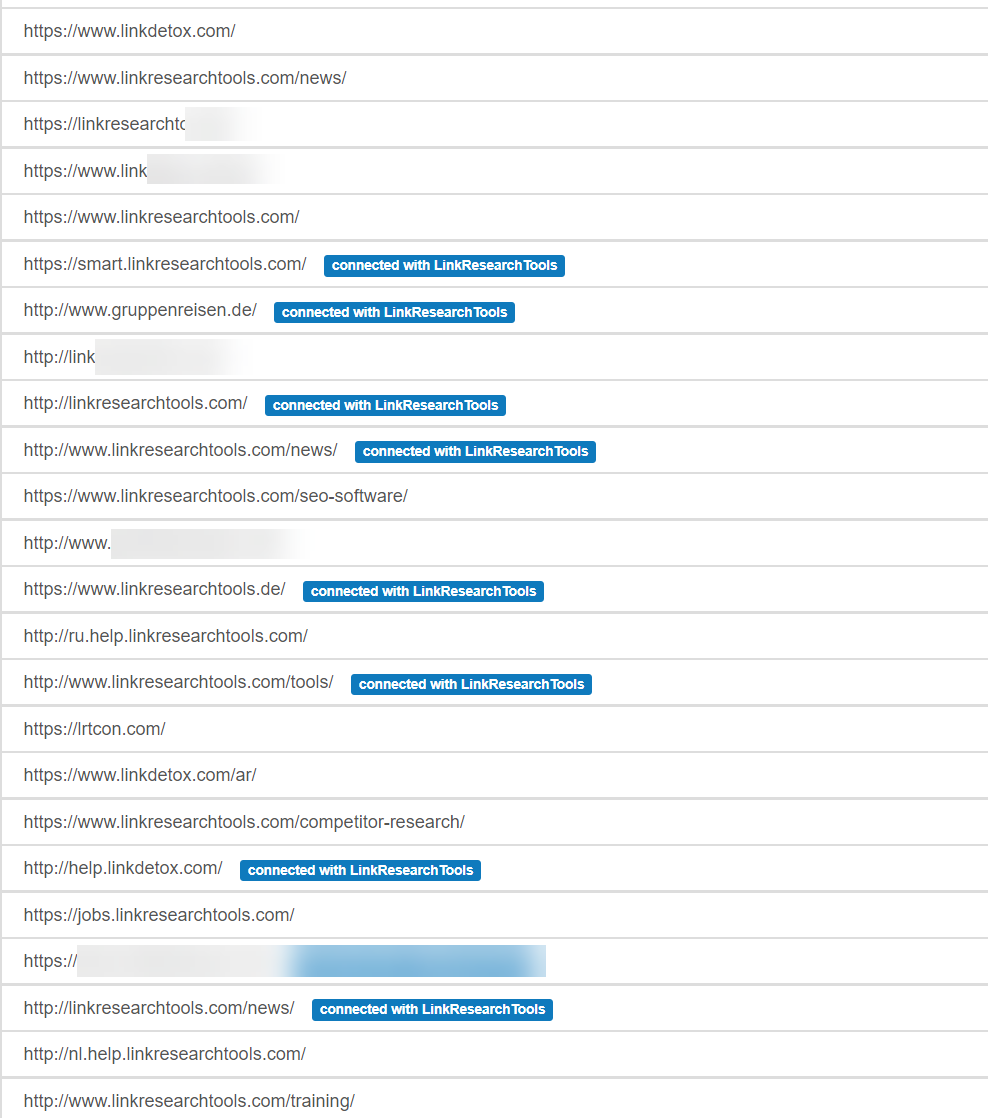

- The ideal setup in LinkResearchTools to get the maximum from Google Search Console would therefore still be

- to connect all your properties - the more the better,

- to set the recrawl frequency to weekly in your Link Crawl Budget plan,

- and by this make sure that we fetch and aggregate all data and verify the links by recrawling.

Because we have tweaked and tuned the Google Search Console link import since 2014, we can make sure that you don't have to jump through hoops with LRT, as it might have seemed at the beginning.

If you have been ignoring the GSC API connection that LRT offers until today, you have hopefully realized what you're missing out on by not using it.

Christoph C. Cemper